-

On this page

Top five takeaways

- Developers must take reasonable steps to ensure accuracy in generative AI models, commensurate with the likely increased level of risk in an AI context, including through using high quality datasets and undertaking appropriate testing. The use of disclaimers to signal where AI models may require careful consideration and additional safeguards for certain high privacy risk uses may be appropriate.

- Just because data is publicly available or otherwise accessible does not mean it can legally be used to train or fine-tune generative AI models or systems. Developers must consider whether data they intend to use or collect (including publicly available data) contains personal information, and comply with their privacy obligations. Developers may need to take additional steps (e.g. deleting information) to ensure they are complying with their privacy obligations.

- Developers must take particular care with sensitive information, which generally requires consent to be collected. Many photographs or recordings of individuals (including artificially generated ones) contain sensitive information and therefore may not be able to be scraped from the web or collected from a third party dataset without establishing consent.

- Where developers are seeking to use personal information that they already hold for the purpose of training an AI model, and this was not a primary purpose of collection, they need to carefully consider their privacy obligations. If they do not have consent for a secondary, AI-related purpose, they must be able to establish that this secondary use would be reasonably expected by the individual, taking particular account of their expectations at the time of collection, and that it is related (or directly related, for sensitive information) to the primary purpose or purposes (or another exception applies).

- Where a developer cannot clearly establish that a secondary use for an AI-related purpose was within reasonable expectations and related to a primary purpose, to avoid regulatory risk they should seek consent for that use and/or offer individuals a meaningful and informed ability to opt-out of such a use.

Quick reference guide

- The Privacy Act applies to the collection, use and disclosure of personal information to train generative AI models, just as it applies to all uses of AI that involve personal information.

- Developers using large volumes of information to train generative AI models should actively consider whether the information includes personal information, particularly where the information is of unclear provenance. Personal information includes inferred, incorrect or artificially generated information produced by AI models (such as hallucinations and deepfakes), where it is about an identified or reasonably identifiable individual.

- A number of uses of AI are low-risk. However, developing a generative AI model is a high privacy risk activity when it relies on large quantities of personal information. As for many uses of AI, this is a source of significant community concern. Generative AI models have unique and powerful capabilities, and many use cases can pose significant privacy risks for individuals as well as broader ethical risks and harms.

- For these reasons, the OAIC (like the Australian community) expects developers to take a cautious approach to these activities and give due regard to privacy in a way that is commensurate with the considerable risks for affected individuals. Developers should particularly consider APPs 1, 3, 5, 6 and 10 in this context.

- Other APPs, such as APPs 8, 11, 12 and 13 are also relevant in this context, but are outside the scope of this guidance. This guidance should therefore be considered together with the Privacy Act 1988 (Privacy Act) and the Australian Privacy Principles guidelines.

Privacy by design

- Developers should take steps to ensure compliance with the Privacy Act, and first and foremost take a ‘privacy by design’ approach when developing or fine-tuning generative AI models or systems, including conducting a privacy impact assessment.

- AI technologies and supply chains can be complex and steps taken to remove or de-identify personal information may not always be effective. Where there is any doubt about the application of the Privacy Act to specific AI-related activities, developers should err on the side of caution and assume it applies to avoid regulatory risk and ensure best practice.

Accuracy

- Generative AI systems are known to produce inaccurate or false results. It is important to remember that generative AI models are probabilistic in nature and do not ‘understand’ the data they handle or generate.

- Under APP 10, developers have an obligation to take reasonable steps to ensure the personal information collected, used and disclosed is accurate. Developers must consider this obligation carefully and take reasonable steps to ensure accuracy, commensurate with the likely increased level of risk in an AI context, including through using high quality datasets, undertaking appropriate testing and the appropriate use of disclaimers.

- In particular, disclaimers should clearly communicate any limitations in the accuracy of the generative AI model, including whether the dataset only includes information up to a certain date, and should signal where AI models may require careful consideration and additional safeguards for certain high privacy risk uses, for example use in decisions that will have a legal or similarly significant effect on an individual’s rights.

Transparency

- To ensure good privacy practice, developers should also ensure they update their privacy policies and notifications with clear and transparent information about their use of AI generally.

Collection

- Just because data is publicly available or otherwise accessible does not mean it can legally be used to train or fine-tune generative AI models or systems. Developers must consider whether data they intend to use or collect (including publicly available data) contains personal information, and comply with their privacy obligations.

- Developers must only collect personal information that is reasonably necessary for their functions or activities. In the context of compiling a dataset for generative AI they should carefully consider what data is required to draft appropriate parameters for whether data is included and mechanisms to filter out unnecessary personal information from the dataset.

- Developers must take particular care with sensitive information, which generally requires consent to be collected. Many photographs or recordings of individuals (including artificially generated ones) contain sensitive information and therefore may not be able to be scraped from the web or collected from a third party dataset without establishing consent.

- Where sensitive information is inadvertently collected without consent, it will generally need to be destroyed or deleted from a dataset.

- Developers must collect personal information only by lawful and fair means. Depending on the circumstances, the creation of a dataset through web scraping may constitute a covert and therefore unfair means of collection.

- Where a third party dataset is being used, developers must consider information about the data sources and compilation process for the dataset. They may need to take additional steps (e.g. deleting information) to ensure they are complying with their privacy obligations.

Use and disclosure

- Where developers are seeking to use personal information that they already hold for the purpose of training an AI model, and this was not a primary purpose of collection, they need to carefully consider their privacy obligations.

- Where developers do not have consent for a secondary, AI-related purpose, they must be able to establish that this secondary use would be reasonably expected by the individual, taking particular account of their expectations at the time of collection, and that it is related (or directly related, for sensitive information) to the primary purpose or purposes (or another exception applies).

- Whether a secondary use is within reasonable expectations will always depend on the particular circumstances. However, given the unique characteristics of AI technology, the significant harms that may arise from its use and the level of community concern around the use of AI, in many cases it will be difficult to establish that such a secondary use was within reasonable expectations.

- Where a developer cannot clearly establish that a secondary use for an AI-related purpose was within reasonable expectations and related to a primary purpose, to avoid regulatory risk they should seek consent for that use and offer individuals a meaningful and informed ability to opt-out of such a use.

Downloads

Updated: 21 October 2024Overview

Who is this guidance for?

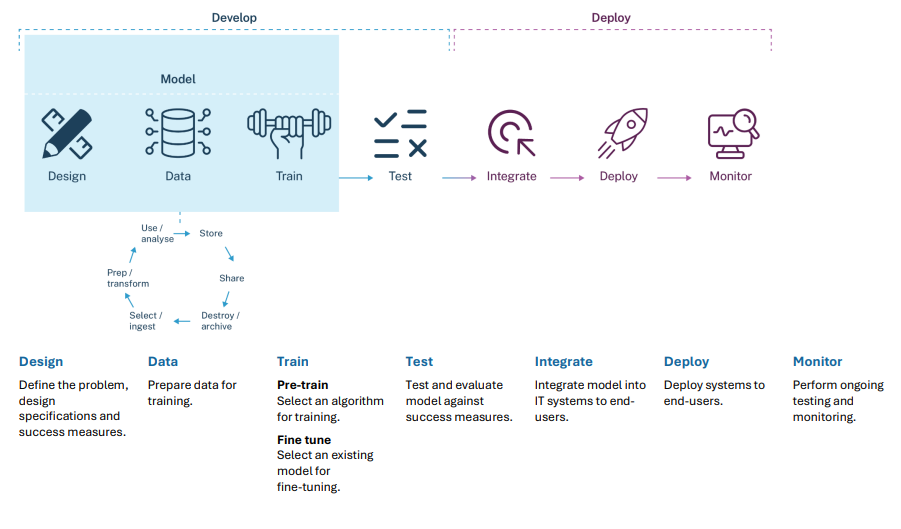

This guidance is intended for developers of generative artificial intelligence (AI) models or systems who are subject to the Privacy Act (see ‘When does the Privacy Act apply?’ below).[1] A developer includes any organisation who designs, builds, trains, adapts or combines AI models and applications.[2] This includes adapting through fine-tuning, which refers to modifying a trained AI model (developed by them or someone else) with a smaller, targeted fine-tuning dataset to adapt it to suit more specialised use cases.[3]

The guidance also addresses where an organisation provides personal information to a developer so they can develop or fine-tune a generative AI model.

Although this guidance has been prepared with specific reference to generative AI training activities, a number of the risks and issues discussed are also applicable to narrow AI systems or models that are trained using personal information. Developers of any kind of AI model that involves personal information will find the guidance helpful when considering the privacy issues that arise.

The Privacy Act applies to organisations that are APP entities developing generative AI or fine-tuning commercially available models for their purposes. It also applies to acts or practices engaged in outside Australia by organisations with an Australian link, such as where they are incorporated in Australia or they carry on business in Australia. Foreign companies carrying on business in Australia, such as digital platforms operating in Australia, will find this guidance useful.

For simplicity, this guidance generally refers to ‘developing’ or ‘training’ a generative AI model to refer to both initial development and fine-tuning, even where these two practices may be done by different entities.

This guidance does not address considerations during testing or deploying or otherwise making a generative AI model or system available for use. The OAIC has separate guidance on privacy and the use of commercially available AI products. The OAIC may also provide further statements or guidance in the future.

Can developers use personal information to develop a generative AI model?

Whether the use of personal information to develop a generative AI model will contravene the Privacy Act depends on the circumstances, such as how the personal information was collected, for what purpose it was collected and whether it includes sensitive information. Developers should consider the obligations and considerations set out in this Guide to determine whether their use of personal information to develop a generative AI model is permitted under the Privacy Act.

How to use this guidance

This guidance is not intended to be a comprehensive overview of all relevant privacy risks and obligations that apply to developing generative AI models. It focuses on APPs 1, 3, 5, 6 and 10 in the context of planning and designing generative AI, and compiling a dataset for training or fine-tuning a generative AI model.

This guidance includes practices that developers that are APP entities must follow in order to comply with their obligations under the Privacy Act as well as good privacy practices for developers when developing and training models. Where something is a matter of best practice rather than a clear legal requirement, this will be presented as an OAIC recommendation or suggestion.

This guidance is only about privacy considerations and the Privacy Act 1988 (Cth). It does not address considerations from other regimes that may apply to developing and fine-tuning AI models.[4]

This guidance does not have to be read from start to finish – you can use the topic headings to navigate to the sections of interest to you. Most sections conclude with a list of practical tips, which draw together the key learnings from each section. We have also included case studies and examples in each section to illustrate the way that the APPs may apply.

Finally, there is a Quick Reference Guide and Checklist that highlights key privacy considerations when planning and designing a generative AI model, and collecting and processing a generative AI training dataset.

Introductory terms

This guidance is about generative AI models or systems.

While there is no single agreed definition of AI, in this guidance AI refers to ‘a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.’[5] An AI model is the ‘raw, mathematical essence that is often the ‘engine’ of AI applications’ such as GPT-4, while an AI system is ‘the ensemble of several components, including one or more AI models, that is designed to be particularly useful to humans in some way’ such as the ChatGPT app.[6]

There are many different kinds of AI.[7] This guidance focuses on generative AI, which refers to ‘an AI model with the capability of learning to generate content such as images, text, and other media with similar properties to its training data’ and systems built on such models. [8] Developing a generative AI model is a high privacy risk activity when it relies on large quantities of personal information.

Large language models (LLMs) and multimodal foundation models (MFMs) are both examples of generative AI. An LLM is ‘a type of generative AI that specialises in the generation of human-like text’. [9] Some examples of products or services incorporating LLMs are Meta AI Assistant, ChatGPT, Microsoft Copilot and HuggingChat.

An MFM is ‘a type of generative AI that can process and output multiple data types (e.g. text, images, audio)’.[10] Some examples of products or services incorporating MFMs that are image or video generators include DALL-E 3, Firefly, Jasper Art, Synthesia, Midjourney and Stable Diffusion. Some examples of products or services incorporating MFMs that are audio generators include Riffusion, Suno, Lyria and AudioCraft.

Generative AI models are trained on the relationship between inputs, using this to identify probabilistic relationships between data that they use to generate responses.[11] This can have implications for their accuracy (discussed further below).

Artificial intelligence and privacy

Artificial intelligence (AI) has the potential to benefit the Australian economy and society, by improving efficiency and productivity across a wide range of sectors and enhancing the quality of goods and services for consumers. However, the data-driven nature of AI technologies, which rely on large datasets that often include personal information, can also create new specific privacy risks, amplify existing risks and lead to serious harms.[12] These AI-specific risks and harms are considered in further detail in the section below.

The Privacy Act 1988 and the Australian Privacy Principles (APPs) apply to all uses of AI involving personal information, including where information is used to train, test or use an AI system. APP entities need to understand their obligations under the APPs when developing generative AI models. This includes being aware of the different ways that they may be collecting, using and disclosing personal information when developing a generative AI model.

What is personal information?

Personal information includes a broad range of information, or an opinion, that could identify an individual. This may include information such as a person’s name, contact details and images or videos where a person is identifiable. What is personal information will vary, depending on whether a person can be identified or is reasonably identifiable in the circumstances. Personal information is a broad concept and includes information which can reasonably be linked with other information to identify an individual.

Sensitive information is a subset of personal information that is generally afforded a higher level of privacy protection. Examples of sensitive information include photographs or videos where sensitive information such as race or health information can be inferred, as well as information about an individual’s political opinions or religious or philosophical beliefs.

Importantly, information can be personal information whether or not it is true. This may include false information generated by an AI system, such as hallucinations or deepfakes.[13]

When does the Privacy Act apply?

The Privacy Act applies to Australian Government agencies, organisations with an annual turnover of more than $3 million, and some other organisations.[14] Importantly, the Privacy Act applies to acts or practices engaged in outside Australia by organisations with an Australian link, such as where they are incorporated in Australia or they carry on business in Australia.[15]

Whether a developer carries on business in Australia can be determined by identifying what transactions make up or support the business and asking whether those transactions or the transactions ancillary to them occur in Australia.[16] By way of example, developers whose business is providing digital platform services to Australians will generally be carrying on business in Australia.

Interaction with Voluntary AI Safety Standard

The National AI Centre has developed a Voluntary AI Safety Standard to help organisations develop and deploy AI systems in Australia safely and reliably. The standard consists of 10 voluntary guardrails that apply to all organisations across the AI supply chain. It does not seek to create new legal obligations, but rather helps organisations deploy and use AI systems in accordance with existing Australian laws. The information in this guidance is focussed on compliance with the Privacy Act, but will also assist organisations in addressing the guardrails in the Standard. For more information, see: www.industry.gov.au/publications/voluntary-ai-safety-standard

Privacy considerations when planning and designing an AI model or system

Privacy by design (APP 1)

Developers subject to the Privacy Act must take reasonable steps to implement practices, procedures and systems that will ensure they comply with the APPs and any binding registered APP code, and are able to deal with related inquiries and complaints.[17] When developing or fine-tuning a generative AI model, developers should consider the potential risks at the planning and design stage through a ‘privacy by design’ approach.

Privacy by design is a process for embedding good privacy practices into the design specifications of technologies, business practices and physical infrastructures.[18]

To mitigate risks, developers first need to understand them. A privacy impact assessment (PIA) is one way to do this for privacy risks. It is a systematic assessment of a project that identifies the impact that the project might have on the privacy of individuals, and sets out recommendations for managing, minimising or eliminating that impact. While PIAs assess a project’s risk of non-compliance with privacy legislation, a best practice approach considers the broader privacy implications and risks beyond compliance, including whether a planned use of personal information will be acceptable to the community.[19]

Some privacy risks that may be relevant in the context of generative AI include the following:

- Individuals losing control over their personal information: Technologies such as generative AI are trained on large amounts of public data, including the personal information of individuals, which is likely to be collected without their knowledge and consent.[20] It can be difficult for individuals to identify when their personal information is used in AI systems and to request the correction or deletion of this information.

- Bias and discrimination: As AI systems learn from source data which may contain inherent bias, this bias may be replicated in their outputs through inferences made based on gender, race or age and have discriminatory effects.[21] AI outputs can often appear credible even when they produce errors or false information.

- Other inaccuracies: Issues in relation to accuracy or quality of the training data (including as a result of data poisoning)[22] and the predictive nature of generative AI models can lead to outputs that are inaccurate but appear credible. [23] Feedback loops can cause the accuracy and reliability of an AI model to degrade over time.[24] Inaccuracies in output can have flow on consequences that depend on the context, including reputational harm, misinformation or unfair decisions.

- Lack of transparency: AI can make it harder for entities to manage personal information in an open and transparent way, as it can be difficult for entities to understand and explain how personal information is used and how decisions made by AI systems are reached.[25]

- Re-identification: The use of aggregated data drawn from multiple datasets also raises questions about the potential for individuals to be re-identified through the use of AI and can make it difficult to de-identify information in the first place.[26]

- Misuse of generative AI systems: The capabilities of generative AI models can be misused through malicious actors building AI systems for improper purposes, or the AI model or end users of AI systems misusing them, with potential impacts on individual privacy or broader negative consequences including through:[27]

- Generating disinformation at scale, such as though deepfakes

- Scams and identity theft

- Generating harmful or illegal content, such as image-based abuse, which can be facilitated through the accidental or unintended collection and use of harmful or illegal material, such as child sexual abuse material, to train AI systems[28]

- Generating harmful or malicious code that can be used in cyber attacks or other criminal activity.[29]

- Risk of disclosure of personal information through a data breach involving the training dataset or through an attack on the model: The vast amounts of data collected and stored by generative AI may increase the risks related to data breaches, especially when individuals disclose particularly sensitive data in their conversations with generative AI chatbots because they are not aware it is being retained or incorporated into a training dataset.[30] This could be through unauthorised access to the training dataset or through attacks designed to make a model regurgitate its training dataset.[31]

A developer may find it difficult to assess the privacy impacts of an AI model or system before developing it as it may not have all the information it requires to make a fulsome assessment. This is particularly so where the AI model developed is general purpose, where the purpose can be quite broad. For this reason:

- developers should consider the privacy risks through the PIA process to the extent possible early, including by reference to general risks

- PIAs should be an ongoing process so that as more information is known developers can respond to any changing risks

- it is important for developers that are fine-tuning models and deployers to consider any additional privacy risks from the intended use.[32]

Where developers build general purpose AI systems or structure their AI systems in a way that places the obligation on downstream users of the system to consider privacy risks, the OAIC suggests they provide any information or access necessary for the downstream user to assess this risk in a way that enables all entities to comply with their privacy obligations. However, as a matter of best practice, developers should err on the side of caution and assume the Privacy Act applies to avoid regulatory risk.

Practical tips - privacy by design

Take a privacy-by-design approach early in the planning process.

Conduct a PIA to identify the impact that the project might have on the privacy of individuals and then take steps to manage, minimise or eliminate that impact.

Accuracy when training AI models (APP 10)

Accuracy risks for generative AI models

Generative AI models carry inherent risks as to their accuracy due to the following factors:

- They are often trained on huge amounts of data sourced from across the internet, which is highly likely to include inaccuracies and be impacted by unfounded biases.[33] The models can then perpetuate and amplify those biases in their outputs.

- The probabilistic nature of generative AI (in which the next word, sub-word, pixel or other medium is predicted based on likelihood) and the way it tokenises input can generate hallucinations. For example, without protective measures an LLM asked how many ‘b’s are in banana will generally state there are two or three ‘b’s in banana as the training data is weighted with instances of people asking how many ‘a’s or ‘n’s are in banana and because of the way it tokenises words not letters.[34]

- The accuracy and reliability of an AI model is vulnerable to deterioration over time. This can be caused by the accumulation of errors and misconceptions across successive generations of training, or by a model’s development on training data obtained up to a certain point in time, which eventually becomes outdated.[35]

- An LLM’s reasoning ability declines when they encounter a scenario or task that differs to what is in their training data.[36]

These risks can be compounded by the tendency of generative AI tools to confidently produce outputs which appear credible, regardless of their accuracy.

Privacy obligations regarding accuracy

Developers of generative AI models must be aware of these risks and how they may impact on their ability to comply with privacy obligations. In particular, APP 10 requires developers to take reasonable steps to ensure that:

- the personal information it collects is accurate, up-to-date and complete; and

- the personal information it uses and discloses is accurate, up-to-date, complete and relevant, having regard to the purpose of the use or disclosure.

The reasonable steps that a developer must take will depend on circumstances that include the sensitivity of the personal information, the nature of the developer, and the possible adverse consequences for an individual if the quality of personal information is not ensured.[37] In the context of generative AI, there will be a strong link to the intended purpose of the AI model. For example, there is a lesser privacy risk associated with an AI system intended to generate songs than one that will be used to summarise key points for insurance claims to allow assessors to assess a claim from the key points. Particular care should be taken where generative AI systems will be used for high privacy risk uses, for example use in decisions that will have a legal or similarly significant effect on an individual’s rights. In these circumstances careful consideration should be given to the development of the model and more extensive safeguards will be appropriate.

How a developer intends the outputs to be used will also be relevant. If the developer does not intend for the output to be treated as factually accurate information about an individual but instead as probabilistic guesses about something that may be true, 100% statistical accuracy may not be required. However, the developer will need to clearly communicate the limits and intended use of the model. Examples of reasonable steps to ensure accuracy are provided below.

Practical tips - examples of reasonable steps to ensure accuracy

Developers should consider the reasonable steps they will need to take to ensure accuracy at the planning stage. The reasonable steps will depend on the circumstances but may require the developer to:

- Take steps to ensure the training data, including any historical information, inferences, opinions, or other personal information about individuals, having regard to the purpose of training the model, is accurate, factual and up-to-date.

- Understand and document the impact that the accuracy of the training data has on the generative AI model outputs.

- Clearly communicate any limitations in the accuracy of the generative AI model or guidance on appropriate usage, including whether the dataset only includes information up to a certain date, and through the use of appropriate disclaimers.

- Have a process by which a generative AI system can be updated if they become aware the information used for training or being output is incorrect or out-of-date.

- Implement diverse testing methods and measures to assess and mitigate risk of biased or inaccurate output prior to release.

- Implement a system to tag content as AI-generated, for example, the use of watermarks on images or video content.

- Consider what other steps are needed to address the risk of inaccuracy such as fine-tuning, allowing AI systems built on the generative AI model to access and reference knowledge databases when asked to perform tasks to help improve its reliability, restricting user queries, using output filters or implementing accessible reporting mechanisms that enable end-users to provide feedback on any inaccurate information generated by an AI system.[38]

Privacy considerations when collecting and processing the training dataset

Generative AI requires large datasets to train the model. In addition, more targeted datasets may be used to fine-tune a model. However, just because data is accessible does not automatically mean that it can be used to train generative AI models. The collection and use of data for model training purposes can present substantial privacy risks where personal information or sensitive information is handled in this process. As outlined above, the Privacy Act regulates the handling of personal information by APP entities, and will apply when a dataset contains personal information. It is therefore important for developers to ensure they comply with privacy laws.

As an initial step, developers need to actively consider whether the dataset they intend to use for training a generative AI model is likely to contain personal information. It is important to consider the data in its totality, including the data,[39] the associated metadata, and any annotations, labels or other descriptions attributed to the data as part of its processing. Developers should be aware that information that would not be personal information by itself may become personal information in combination with other information.[40]

If there is a risk the dataset contains some personal information, developers must consider whether their collection of the dataset complies with privacy law or take appropriate steps to ensure there is no personal information in the dataset. This section sets out considerations for different methods of compiling a dataset:

- Data scraping (see ‘Collection obligations (APP 3)’): Data scraping generally involves the automated extraction of data from the web, for example collecting profile pictures or text from social media sites. This may arise in a range of circumstances, including where a developer uses web crawlers to collect data or where they follow a series of links in a third party dataset and download or otherwise collect the data from the linked websites.

- Collecting a third party dataset (see ‘Collection obligations (APP 3)’): Another common source of data for training is datasets collected by third parties. This includes licensed datasets collected by data brokers, datasets compiled by universities and freely available datasets compiled from scraped or crawled information (e.g. Common Crawl).

- Using a dataset you (or the organisation you are developing the model for) already hold (see ‘Use and disclosure obligations (APP 6)’).

Using de-identified information

Developers using de-identified information will need robust de-identification governance processes.[41] As part of this, developers should be aware that de-identification is context dependent and may be difficult to achieve. In addition, developers seeking to use de-identified information to train generative AI models should be aware of the following:

- De-identifying personal information is a use of the personal information for a secondary purpose.[42]

- If a developer collects information about identifiable individuals with the intention of de‑identifying it before model training, that is still a collection of personal information and the developer needs to comply with their obligations when collecting personal information under the Privacy Act (including deleting sensitive information).

Data minimisation when collecting and processing datasets

The Privacy Act requires developers to collect or use only the personal information that is reasonably necessary for their purposes.[43] An important aspect of considering what is ‘reasonably necessary’ is first specifying the purpose of the AI model. This should be a current purpose rather than collecting information for a potential, undefined future AI product. Once the purpose is established, developers should consider whether they could train the AI model without collecting or using the personal information, by collecting or using a lesser amount (or fewer categories) of personal information or by de-identifying the personal information.

Example – what personal information is reasonably necessary?

The purpose of the AI model or eventual AI system will inform what information needs to be included in the training dataset. For example, a generative AI model being developed to analyse brain scans and prepopulate a preliminary report will likely need examples of brain scans and their associated medical reports to be included in the training data, but consideration should be given to whether other information, for example details identifying the patient in the metadata or annotations, can be removed.

Practical tips – ways to minimise personal information

When training a generative AI model, developers can minimise the personal information they are using by:

- limiting the information at the collection stage through collection criteria such as:

- ensuring that certain sources are excluded from the collection criteria, such as public social media profiles, websites that are known to contain large amounts of personal information or websites that collect sensitive information by nature.

- excluding certain categories of data from collection criteria to prevent the collection of personal information or sensitive information.

- limiting collection through other criteria such as time periods.

- limiting annotations to what is necessary to train the model.

- removing or ‘sanitising’ personal information after it has been collected, but before it is used for model training purposes. This can occur through using a filter or other process to identify and remove personal information from the dataset.

The OAIC notes that these privacy-enhancing tools and technologies, including de-identification techniques, can be helpful risk-reduction strategies and assist in complying with APP 3.

However, as a matter of best practice developers should still err on the side of caution and treat data as personal information where there is any doubt to avoid regulatory risk. Even if a developer uses collection criteria and data sanitisation techniques it will still need to consider compliance with its other obligations under the Privacy Act.

Collection obligations (APP 3)

Collecting data through data scraping

The Privacy Act requires personal information to be collected directly from the individual unless it is unreasonable or impracticable to do so.[44] As scraped data is not collected directly from the individual, developers will need to consider whether their collection of personal information meets this test.

Personal information must also be collected by lawful and fair means. Examples of collections that are unlawful include collecting information in breach of legislation, that would constitute a civil wrong or would be contrary to a court or tribunal order.[45] Fairness is an open-textured and evaluative criterion. A fair means of collection is one that does not involve intimidation or deception, and is not unreasonably intrusive. Generally, it will be unfair to collect personal information covertly without the knowledge of the individual, although this will depend on the circumstances.[46]

Given the challenges for robust notice and transparency measures (discussed further below), the creation of a dataset through scraped data is generally a covert method of collection.[47] Whether this renders the collection unfair will depend on the circumstances such as:

- what the individual would reasonably expect;

- the sensitivity of the personal information;

- the intended purpose of the collection, including the intended operation of the AI model;

- the risk of harm to individuals as a result of the collection;

- whether the information being collected was intentionally made public by the individual that the information is about (in contrast to the individual who posted or otherwise published the information online); and

- the steps the developer will take to prevent privacy impacts, including mechanisms to delete or de-identify personal information or provide individuals with control over the use of their personal information.[48]

Caution – Developers should not assume information posted publicly can be used to train models

Developers should not assume that the collection of information that has been made public on the internet complies with APP 3.5. Personal information that is publicly available may have been made public by a third party, and even if it was made public by the individual themselves, they may not expect it to be collected and used to train an AI model, and therefore the collection of it for the purposes of training generative AI may not meet the requirements of APP 3.5.

Sensitive information

Further protections apply to sensitive information under the Privacy Act, which developers must not collect without consent unless an exception applies.

What is sensitive information?

Sensitive information is any biometric information to be used for the purposes of automated biometric verification or biometric identification, biometric templates, health information about an individual, genetic information about an individual or personal information about an individual for certain topics such as racial or ethnic origin, political opinions or sexual orientation.[49]

Information may be sensitive information where it clearly implies one of these matters.[50] For example, an image of a person is sensitive information where it implies one of the categories of sensitive information or will be used for automated identification. Labels or annotations assigned to images that include sensitive information such as race or whether someone has a disability will also be sensitive information.

If no exceptions apply, developers require valid consent to collect sensitive information under the APPs.[51] When developers scrape data, there are challenges with obtaining valid consent to the collection of sensitive information. In particular, consent under the Privacy Act must be express or implied consent.[52] Express consent is given explicitly, and implied consent may reasonably be inferred in the circumstances of the individual.[53] Generally developers should not assume that an individual has given consent on the basis that they did not object to a proposal to handle information in a particular way.[54] For example, a failure of a website to implement measures to prevent data scraping should not be taken as implied consent. Developers must also be conscious that information on publicly accessible websites may have been uploaded by a different person to the individual the information is about.

If consent cannot be obtained and no exception applies, developers must delete sensitive information from the dataset.

Example – consent when scraping profile pictures from social media

A developer collects public profile pictures from a social media website. The profile pictures are of sufficient quality to allow the individual/s in the pictures to be recognised and to allow inferences to be made about race or medical conditions due to physical characteristics displayed in the picture. The developer will need to have consent to collect the images unless another exception to APP 3.3. applies.

Practical tips – checks when collecting through data scraping

Developers collecting a data set through data scraping should:

- consider whether personal or sensitive information is in the data to be collected.

- consider the data minimisation techniques set out earlier, including whether personal information collected can be de-identified.

- once the data is collected, consider whether any unnecessary data can be deleted.

- determine whether they have valid consent for the collection of sensitive information or delete sensitive information.

- consider whether their means of collection is lawful and fair based on the circumstances.

- consider whether they could collect the data directly from individuals rather than indirectly from third party websites.

- consider what changes to privacy policies and collection notices are needed to comply with their notice and transparency obligations (see section on Notice and transparency obligations).

Collecting a third party dataset

When developers obtain a third party dataset that contains personal information, this will generally constitute a collection of personal information by the developer from the third party. As such, developers must consider whether they are complying with their privacy obligations in collecting and using this information.[55] Similar considerations to those specified for data scraping will be relevant. The circumstances of how the dataset was collected will be important to consider in this context. In particular:

- the steps the third party took when collecting the information to inform individuals that it would provide their personal information to others for them to use to train generative AI models will impact whether the collection by the developer is lawful and fair; and

- whether the third party obtained valid consent on behalf of the developer for the collection of sensitive information will be relevant to whether the developer can collect sensitive information or must delete it from the dataset.

In addition to deleting sensitive information from the dataset, developers may need to de-identify personal information prior to using the dataset.

Where possible, the OAIC recommends that developers seek information or assurances from third parties in relation to the dataset including through:

- contractual terms that the collection of personal information and its disclosure by the third party for the purposes of the developer training a generative AI model does not breach the Privacy Act 1988 (Cth), or, where the third party is not subject to the Privacy Act, would not do so if they were subject to the Privacy Act;

- requesting information about the source of any personal information; and

- requesting copies of what information was provided to individuals about how their personal information would be handled.

Where datasets of scraped or crawled content are made publicly available by a third party it may not be possible to amend the terms and conditions attached to the use of that data to require compliance with the Privacy Act. Where this is the case, developers must carefully consider the information provided about the data sources and compilation process for the dataset in the context of the privacy risks associated with data scraping set out in the previous section. As set out above, it may be necessary to delete sensitive information and delete or de-identify personal information prior to using the dataset.

Practical tips – checks when collecting a third party dataset

Developers collecting a third party data set should:

- consider whether personal or sensitive information is in the data to be collected.

- consider the data minimisation techniques set out earlier, including to narrow the personal information they collect to what is reasonably necessary to train the model.

- seek and consider information about the circumstances of the original collection, including its data sources and notice provided.

- if possible, seek assurances from the third party in relation to the provenance of the data and circumstances of collection.

- consider whether their means of collection is lawful and fair based on the circumstances.

- consider whether the data could be collected directly from the individual rather than from a third party.

- consider whether they have valid consent to collect any sensitive information – if they do not and another exception does not apply they will need to delete it.

- consider what changes to their privacy policies and collection notices (or the third party’s collection notices) are needed to comply with their notice and transparency obligations (see section on notice and transparency).

If the developer cannot establish their collect and use complies with their privacy obligations it may be necessary to delete sensitive information and delete or de-identify personal information prior to using the dataset to manage privacy risk.

Use and disclosure obligations (APP 6)

Developers may wish to use personal information they already hold to train a generative AI model, such as information they collected through operating a service, interactions with an AI system or a dataset they compiled for training an earlier AI model. However, before they do so, developers will need to consider whether that use is permitted under the Privacy Act. The considerations for reuse of data will also be relevant where an organisation is providing information to a developer to build a model for them.

When is information publicly available on a website ‘held’?

A developer, or organisation providing information to a developer, holds personal information if it has possession or control of a record that contains the personal information.[56] For the purposes of the Privacy Act, a record does not include generally available publications.[57] However, a website is not a generally available publication merely because anybody can access it. Instead, this will depend on consideration of a range of factors, including the circumstances, the nature of the information, the prominence of the website, the likelihood of the access and the steps needed to obtain that access.[58] Developers and organisations providing information to developers should exercise caution when claiming they do not hold personal information, due to it being available on a public facing website.

Using or disclosing personal information for a secondary, AI-related purpose

Organisations, including developers, can only use personal information or disclose it to third parties for the primary purpose or purposes for which it was collected unless they have consent or an exception applies.[59] The primary purpose or purposes are the specific functions or activities for which particular personal information is collected.[60] For example, where information is collected for the purpose of providing a particular service such as a social media service, providing that specific social media service will be the primary purpose even where the provider of the service has additional secondary purposes in mind.

Consent

Consent under the Privacy Act means express or implied consent and has the following four elements:

- the individual is adequately informed before giving consent;

- the individual gives consent voluntarily;

- the consent is current and specific; and

- the individual has the capacity to understand and communicate their consent.[61]

In the context of training generative AI, developers and organisations providing personal information to developers may have challenges ensuring the individual is adequately informed. This is because training generative AI models is a form of complex data processing that may be difficult for individuals to understand. Developers training models and organisations providing personal information to developers should therefore consider how they can provide information in an accessible way. In addition, as consent must be voluntary, current and specific, they should not rely on a broad consent to handle information in accordance with the privacy policy, as consent for training generative AI models.[62]

Informing individuals about training generative AI models

Developers and organisations providing personal information to developers should consider what information will give individuals a meaningful understanding of how their personal information will be handled, so they can determine whether to give consent. This could include information about the function of the generative AI model, a general description of the types of personal information that will be collected and processed and how personal information is used during the different stages of developing and deploying the model.

Reasonable expectations

One of the exceptions in the Privacy Act permits personal information to be used for a secondary purpose if the individual would reasonably expect it to be used for the secondary purpose and the secondary purpose is related to a primary purpose. If the information is sensitive information, the information needs to be directly related to a primary purpose.

The ‘reasonably expects’ test is an objective one that has regard to what a reasonable person, who is properly informed, would expect in the circumstances.[63] Relevant considerations include whether, at the time the developer or organisation providing information to the developer collected the information:

- the developer or organisation providing information to the developer provided information about use for training generative AI models through an APP 5 notice, or in its APP privacy policy; and

- the secondary purpose was a well understood internal business practice.

Whether APP 5 notices or privacy policies were updated, or other information was given at a point in time after the collection may also be relevant to this assessment. It is possible for an individual’s reasonable expectations in relation to secondary uses that are related to the primary purpose to change over time. However, particular consideration should be given to the reasonable expectations at the time of collection, given this is when the primary purpose or purposes are determined. In the context of training generative AI models, updating a privacy policy or providing notice by themselves will generally not be sufficient to change reasonable expectations regarding the use of personal information that was previously collected for a different purpose.

Even if the individual would reasonably expect their information to be used for a secondary purpose, that purpose still needs to be related (for personal information) or directly related (for sensitive information) to the primary purpose. A related secondary purpose is one which is connected to or associated with the primary purpose and must have more than a tenuous link.[64] A directly related secondary purpose is one which is closely associated with the primary purpose, even if it is not strictly necessary to achieve that primary purpose.[65] This will be difficult to establish where information collected to provide a service to individuals will be used to train a generative AI model that is being commercialised outside of the service (rather than to enhance the service provided).

Practical steps for compliance

Where a developer or an organisation providing personal information to a developer cannot clearly establish that using the personal information they hold to train a generative AI model is within reasonable expectations and related to the primary purpose, they should seek consent for that use and/or offer individuals the option of opting-out of such a use. The opt-out mechanism must be accompanied by sufficient information to inform the individual about the intended use of their personal information and sufficient time to exercise the opt-out.

Example 1 – social media companies reusing historic posts

A social media company has collected posts from users on their platform since 2010 for the purpose of operating the service. In 2024 they wish to use these posts to train a LLM which they will then licence to others. They update their privacy policy to say that they use the information they hold to train generative AI and then proceed to use the personal information they hold going back to 2010 to train a generative AI model.

This use of personal information would not be permitted where the association with the original purpose of collection is too tenuous and the individuals would not have reasonably expected the information they provided in the past to be used to train generative AI. In these circumstances, the social media company could consider de-identifying the information before using it, or taking appropriate steps to seek consent, for example by informing impacted individuals, providing them with an easy and user-friendly mechanism to remove their posts and providing them with a sufficient amount of time to do so.

Example 2 – using prompts to a chatbot to retrain the chatbot

An AI model developer makes an AI chatbot publicly available. Prompts entered by individuals are used to provide the chatbot service. Where those prompts contain personal information, providing the chatbot service is the primary purpose of collection. The developer also wishes to use the prompts and responses to train future versions of the AI model underlying the chatbot service so it can improve over time. They provide clear information about this to individuals using the product through a sentence above the prompt input field explaining that interactions with the chatbot are used to retrain the AI model, and a more detailed explanation in their privacy policy.

As the individual was informed before using the chatbot and this use is related to the primary purpose, this secondary use of the data would likely be within users’ reasonable expectations.

Example 3 – bank providing information to a developer to develop or fine-tune an AI model for their business purposes

A bank wants to provide a developer with historic customer queries to fine-tune a generic LLM for use in a customer chatbot. The customer queries include personal information, such as names and addresses of customers. The bank should first consider whether the fine-tuning dataset needs to include the personal information or if the customer queries can be de-identified.

If the customer queries cannot be de-identified, the bank will need to consider whether APP 6 permits the customer queries to be disclosed to the third party developer. The primary purpose of collecting the personal information was to respond to the customer query. As such, the organisation will need to consider whether they have consent from the individuals the information is about, or an exception applies to use it for this secondary purpose.

To comply with APP 6, the bank provides information to its customers about its intended use of previous customer queries and provides a mechanism for its customers and previous customers to opt-out from their information being used in this way. It also:

- updates its privacy policy and collection notice to make clear that personal information will be used to fine-tune a generative AI chatbot for internal use

- agrees other protections with the developer such as filters to ensure personal information from the training dataset will not be included in outputs.

The OAIC also has guidance for entities using commercially available AI products, including considerations when selecting a commercially available AI product.

Practical tips – checks when using or disclosing personal information for a secondary, AI-related, purpose

Organisations providing information to developers and developers reusing personal information they already hold to train generative AI should:

- consider the data minimisation techniques set out earlier, including to narrow the personal information they use to what is reasonably necessary to train the model.

- consider whether the use or disclosure is for a primary purpose the personal information was collected for.

- if use or disclosure to train AI is a secondary purpose and they wish to rely on reasonable expectations:

- consider what were the reasonable expectations of the individual at the time of collection.

- consider what information was provided at the time of the collection (through APP 5 notification and the privacy policy).

- consider whether training generative AI is related (for personal information) or directly related (for sensitive information) to the primary purpose of collection.

- consider if another exception under APP 6 applies.

- if an exception does not apply, seek consent for that use and offer individuals a meaningful and informed ability to opt-out - the opt-out mechanism must be accompanied by sufficient information to inform the individual about the intended use of their personal information.

- consider what changes to their privacy policies and collection notices are needed to comply with their notice and transparency obligations (see section on notice and transparency).

Notice and transparency obligations (APP 1 and APP 5)

Regardless of how a developer compiles a dataset, they must:

- have a clearly expressed and up-to-date privacy policy about their management of personal information

- take such steps as are reasonable to notify or otherwise ensure the individual is aware of certain matters at, before or as soon as practicable after they collect the personal information.[66]

A developer’s privacy policy must contain the information set out in APP 1.4. In the context of training a generative AI model, this will generally include:

- information about the collection (whether through data scraping, a third party or otherwise) and how the data set will be held

- the purposes for which it collects and uses personal information, specifically that of training generative AI models

- how an individual may access personal information about them that is held by the developer and seek correction of such information, including an explanation of how this will work in the context of the dataset and generative AI model.

Similarly, organisations providing personal information to developers must include the information set out in APP 1.4 in their privacy policy. This will generally include the purposes for which they disclose personal information, specifically to train generative AI models.

It is important to note that generally, having information in a privacy policy is not a means of meeting the obligation to notify individuals under APP 5.[67] Transparency should be supported by both a notice under APP 5 and through the privacy policy.

The steps taken to notify individuals that are reasonable will depend on the circumstances, including the sensitivity of the personal information collected, the possible adverse consequences for the individual as a result of the collection, any special needs of the individual and the practicability of notifying individuals.[68] The sections below include considerations relevant to notice in common kinds of data collection for training AI models.

Notice in the context of data scraping

Data scraping may pose challenges for developers in relation to notification, particularly if they do not have a direct relationship with the individual or access to their contact details. Despite this, developers still need to consider what steps are reasonable in the circumstances. If individual notification is not practicable, developers should consider what other mechanisms they can use to provide transparency to affected individuals, such as making information publicly available in an accessible manner.[69]

The intention of APP 5 is to explain in clear terms how personal information is collected, used and disclosed in particular circumstances. One matter that is particularly relevant in the context of data scraping is providing information about the facts and circumstances of the collection of information. In the context of data scraping this should include information about the categories of personal information used to develop the model, the kinds of websites that were scraped, and if possible the domain names and URLs. Given the lack of transparency when information is scraped, it is good practice for developers to leave a reasonable amount of time between taking steps to inform individuals about the collection of information and training the generative AI model.

Notice in the context of third party data

Where the dataset was collected by a third party, that third party may have provided notice of relevant matters under APP 5 to the individual. The developer will need to consider what information has been provided to the individual by the third party so that the developer can assess whether it has taken such steps as are reasonable to ensure the individual is aware of relevant matters under APP 5.[70] This includes whether information was provided about the circumstances of the developer’s collection of personal information from the third party.

Notice when using personal information for a secondary purpose

As set out above, whether an APP 5 notice stated that personal information will be used for the purpose of training generative AI models at the time of collection is relevant to whether this was within an individual’s reasonable expectations. To form a reasonable expectation, this information needs to be provided in an easily accessible way that is clearly explained. Developers and organisations providing personal information to developers should explicitly refer to training artificial intelligence models rather than relying on broad or vague purposes such as research.

Practical tips – notice and transparency

Developers and organisations providing information to developers should:

- ensure their privacy policy and any APP 5 notifications are clearly expressed and up-to-date. Do they clearly indicate and explain the use of the data for AI training purposes?

- take steps to notify affected individuals or ensure they are otherwise aware that their data has been collected.

- where data scraping has been used and individual notification is not possible, consider other ways to provide transparency such as through publishing and promoting a general notification on their website.

Other considerations

This guidance focuses on compliance with APPs 1, 3, 5, 6 and 10 of the Privacy Act, but is not an exhaustive consideration of all the privacy obligations that will be relevant when developing or fine-tuning a generative AI model. The application of the Privacy Act will depend on the circumstances, but developers may also need to consider:

- how to manage overseas data flows where datasets or other kinds of personal information are disclosed overseas (APP 8);

- how they can design their generative AI model or system and keep records of where their data was sourced from in a way that enables compliance with individual rights of access and correction, and the consequences of withdrawal of consent (APPs 12 and 13); and

- how to appropriately secure training datasets and AI models, and when datasets should be destroyed or de-identified (APP 11).

Checklist – privacy considerations when developing or training an AI model

Privacy issue | Considerations |

|---|---|

Can obligations to implement practices, procedures and systems to ensure APP compliance be met? | Has a privacy by design approach been adopted? Has a privacy impact assessment been completed? |

Have reasonable steps been taken to ensure accuracy at the planning stage? | Is the training data accurate, factual and up to date considering the purpose it may be used for? What impact will the accuracy of the data have on the model’s outputs? Are the limitations of the model communicated clearly to potential users, for example through the use of appropriate disclaimers? Can the model be updated if training data becomes inaccurate or out-of-date? Have diverse testing methods or other review tools or controls been implemented to identify and remedy potential accuracy issues? Has a system to tag content been implemented (e.g. use of watermarks)? Have any other steps to address the risk of inaccuracy been taken? |

Can obligations in relation to collection be met? | Is there personal or sensitive information in the data to be collected? Could de-identified data be used, or other data minimisation techniques integrated to ensure only necessary information is collected or used? Once collected, has the data been reviewed and any unnecessary data been deleted? Is there valid consent for the collection of any sensitive information or has sensitive information been deleted? Is the means of data collection lawful and fair? Could the data be collected directly from individuals rather than from a third party? Where data is collected from a third party, has information been sought about the circumstances of the original collection (including notification and transparency measures) and have these circumstances been considered? Have assurances been sought from relevant third parties in relation to the provenance of the data and circumstances of collection? If scraped data has been used, have measures been undertaken to ensure this method of collection complies with privacy obligations? |

Can obligations in relation to use and disclosure be met? | What is the primary purpose the personal information was collected for? Where the AI-related use is a secondary purpose, what were the reasonable expectations of the individual at the time of collection? What was provided in the APP 5 notification (and privacy policy) to individuals at the time of collection? Have any new notifications been provided? Does consent need to be sought for the secondary AI related use, and/or could a meaningful and informed opt-out be provided as an option? |

Have transparency obligations been met? | Are the privacy policy and any APP 5 notifications clearly expressed and up-to-date? Do they clearly indicate and explain the use of the data for AI training purposes? Have steps been taken to notify affected individuals that their data has been collected? Where data scraping has been used and individual notification is not possible, has general notification been considered? |

Downloads

Updated: 21 October 2024[1] This guidance is intended to apply to all kinds of generative AI, including both MFMs and LLMs. It considers the obligations that apply to organisations that are APP entities under the Privacy Act.

[2] DISR. Safe and responsible AI in Australia: Proposals paper for introducing mandatory guardrails for AI in high-risk settings, September 2024, p 8.

[3] See F Yang et al, GenAI concepts, ADM+S and OVIC, 2024.

[4] For considerations from some other regimes see National Artificial Intelligence Centre, Voluntary AI Safety Standard, DISR, August 2024, pp 11-12; DP-REG, Working Paper 3: Examination of technology – Multimodal Foundation Models, DP-REG, August 2024; DP-REG, Working Paper 2: Examination of technology – Large Language Models, DP-REG, 25 October 2023.

[5] Organisation for Economic Co-operation and Development (OECD), What is AI? Can you make a clear distinction between AI and non-AI systems?, OECD AI Policy Observatory website, 2024.

[6] DISR. Safe and responsible AI in Australia: Proposals paper for introducing mandatory guardrails for AI in high-risk settings, September 2024, p 53.

[7] For further information on how some artificial intelligence terms interrelate see the technical diagram in F Yang et al, GenAI concepts, ADM+S and OVIC, 2024.

[8] DISR, Safe and responsible AI in Australia: Proposals paper for introducing mandatory guardrails for AI in high-risk settings, September 2024.p 54.

[9] DISR, Safe and responsible AI in Australia – Discussion paper, DISR, June 2023, p 5.

[10] DISR, Safe and responsible AI in Australia – Discussion paper, DISR, June 2023, p 5.

[11] For more detail on the process of training generative AI see Bell et al, Rapid Response Information Report: Generative AI - language models (LLMs) and multimodal foundation models (MFMs), 24 March 2023.

[12] DP-REG, Working Paper 3: Examination of technology – Multimodal Foundation Models, DP-REG, August 2024, p 3.

[13] A hallucination occurs where an AI model makes up facts to fit a prompt’s intent – this occurs when an AI system searches for statistically appropriate words in processing a prompt, rather than searching for the most accurate answer: F Yang et al, GenAI concepts , ADM+S and OVIC, 2024. A deepfake is a digital photo, video or sound file of a real person that has been edited to create a realistic or false depiction of them saying or doing something they did not actually do or say: eSafety Commissioner (eSafety), Generative AI – Tech trends and challenges position statement, eSafety, August 2023; eSafety Commissioner (eSafety), Deepfake trends and challenges — position statement, eSafety, May 2020.

[14] Some organisations with an annual turnover of less than $3 million are also subject to the Privacy Act. For example, health service providers and organisations that disclose personal information for a benefit, service or advantage or provide a benefit, service or advantage to collect personal information about another individual from anyone else are subject to the Privacy Act regardless of their turnover. For further information see Privacy Act 1988 (Cth) ss 6D, 6E and 6EA and OAIC, Rights and responsibilities, OAIC, n.d, accessed 9 August 2024.

[15] OAIC, ‘Chapter B: Key concepts’ Australian Privacy Principles guidelines, OAIC, 21 December 2022, [B.11]-[B.25].

[16] Facebook Inc v Australian Information Commissioner (2022) 289 FCR 217 [83]-[87].

[17] Privacy Act 1988 (Cth) APP 1.2.

[18] OAIC, Privacy by design, OAIC, n.d, accessed 19 August 2024.

[19] OAIC, Guide to undertaking privacy impact assessments, OAIC, 2 September 2021.

[20] Electronic Privacy Information Centre, Generating Harms: Generative AI’s Impact & Paths Forward, EPIC, May 2023, pp 24-29.

[21] Electronic Privacy Information Centre, Generating Harms: Generative AI’s Impact & Paths Forward, EPIC, May 2023, p 53; IBM Ethics Board, Foundation models: Opportunities, risks and mitigations [pdf], IBM, February 2024, pp 9, 12.

[22] Data poisoning involves manipulating an AI model’s training data so that the model learns incorrect patterns and may misclassify data or produce inaccurate, biased or malicious outputs: Australian Cyber Security Centre (ASCS), Engaging with Artificial Intelligence, Australian Signals Directorate, 24 January 2024, p. 6.

[23] E Bender et al, ‘On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’ [conference presentation], ACM Conference on Fairness, Accountability, and Transparency, 3-10 March 2021, pp 616-617.

[24] I Shumailov et al, ‘AI models collapse when trained on recursively generated data’, Nature, 2024, 631:755-759.

[25] Note that although AI poses significant issues in relation to explainability, these are not covered more broadly in this guidance which focuses on the privacy risks of generative AI.

[26] Organisation for Economic Development (OECD), ‘AI, data governance, and privacy: Synergies and areas of international co-operation’, OECD Artificial Intelligence Papers, No. 22, OECD, June 2024 p. 21. For more on de-identification see OAIC, De-identification and the Privacy Act, OAIC, 21 March 2018.

[27] IBM Ethics Board, Foundation models: Opportunities, risks and mitigations [pdf], IBM, February 2024, pp 12-13.

[28] The eSafety Commissioner (eSafety) is Australia’s independent regulator and educator for online safety. eSafety provides more information on the online safety risks and harms of generative AI. eSafety Commissioner (eSafety), Generative AI – Tech trends and challenges position statement , eSafety, August 2023.

[29] A number of these issues are directly relevant to other areas of law. For considerations from some other regimes see National Artificial Intelligence Centre, Voluntary AI Safety Standard, DISR, August 2024, pp 11-12; DP-REG, Working Paper 3: Examination of technology – Multimodal Foundation Models, DP-REG, August 2024; DP-REG, Working Paper 2: Examination of technology – Large Language Models, DP-REG, 25 October 2023.

[30] G Bell et al, Rapid Response Information Report: Generative AI - language models (LLMs) and multimodal foundation models (MFMs), 24 March 2023; National Cyber Security Centre (UK), ChatGPT and large language models: what’s the risk?, 14 March 2023.

[31] G Bell et al, Rapid Response Information Report: Generative AI - language models (LLMs) and multimodal foundation models (MFMs), 24 March 2023; Confederation of European Data Protection Organizations, Generative AI: The Data Protection Implications, 16 October 2023, p 12.

[32] For considerations when entities deploy or use commercial AI systems see to OAIC guidance on privacy and entities using commercially available AI products.

[33] E Bender et al, ‘On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’ [conference presentation], ACM Conference on Fairness, Accountability, and Transparency, 3-10 March 2021.

[35] I Shumailov et al, ‘AI models collapse when trained on recursively generated data’, Nature, 2024, 631:755-759; OECD, ‘AI, data governance, and privacy: Synergies and areas of international co-operation’ p. 21.

[36] See discussion of capability in Y Benigo et al, ‘International Scientific Report on the Safety of Advanced AI: Interim Report’, DIST research paper series, number 2023/009, 2024 pp21-22, 26-27.

[37] OAIC, ’Chapter 10: APP 10 Quality of personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019 [10.6].

[38] eSafety Commissioner (eSafety), Generative AI – Tech trends and challenges position statement, eSafety, August 2023.

[39] Both structured and unstructured data should be considered.

[40] OAIC, What is personal information?, OAIC, 5 May 2017.

[41] Researchers have demonstrated that while data sanitisation is useful in limited cases, such as for the removal of ‘context-independent’ or ‘well-defined’ personal information from a dataset (e.g. certain government identifiers), it may have limitations in recognising non-specific textual personal information (e.g. messages that reveal sensitive personal information) - Brown et al, ‘What Does it Mean for a Language Model to Preserve Privacy?’ (20 June 2022) FAccT '22: Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, 2, 10-11. For information on de-identification in the context of the Privacy Act see OAIC, De-identification and the Privacy Act, OAIC, 21 March 2018.

[42] OAIC, ’Chapter 6: APP 6 Use or disclosure of personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [6.22], [6.25], [6.28].

[43]Privacy Act 1988 (Cth) APP 3.2; OAIC, ‘Chapter 3: APP 3 Collection of solicited personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [3.17]-[3.21]; OAIC, ’Chapter 6: APP 6 Use or disclosure of personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [6.21].

[44] Privacy Act 1988 (Cth) APP 3.6. For a discussion of this principle in a different context see K Kemp, Australia’s Forgotten Privacy Principle: Why Common ‘Enrichment’ of Customer Data for Profiling and Targeting is Unlawful, Research Paper, 20 September 2022.

[45] OAIC, ‘Chapter 3: APP 3 Collection of solicited personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [3.61].

[46] OAIC, ‘Chapter 3: APP 3 Collection of solicited personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [3.62].

[47] OAIC, ‘Chapter 3: APP 3 Collection of solicited personal information’, Australian Privacy Principles guidelines, OAIC, 22 July 2019, [3.62].

[48] See discussion of whether data scraping is lawful and fair in 'AHM' and JFA (Aust) Pty Ltd t/a Court Data Australia (Privacy) [2024] AICmr 29 and Commissioner initiated investigation into Clearview AI, Inc. (Privacy) [2021] AICmr 54.

[49] Privacy Act 1988 (Cth) s 6(1), definition of sensitive information.

[50] OAIC, ‘Chapter B: Key concepts’, Australian Privacy Principles guidelines, OAIC, 21 December 2022, [B.142].

[51] Privacy Act 1988 (Cth) APP 3.3.

[52] Privacy Act 1988 (Cth) s 6(1), definition of consent.

[53] OAIC, ‘Chapter B: Key concepts’, Australian Privacy Principles guidelines, OAIC, 21 December 2022, [ B.39]-[B.40].

[54] OAIC, ‘Chapter B: Key concepts’, Australian Privacy Principles guidelines, OAIC, 21 December 2022, [B.42].

[55] Although not the focus of this guidance, the third party providing the dataset will also need to consider whether they are complying with their privacy obligations.

[56] Privacy Act 1988 (Cth) s 6(1), definition of ‘holds’.

[57] Privacy Act 1988 (Cth) s 6(1), definition of ‘record’.